Investigating Transparency Methods in a Robot Word-Learning System and Their Effects on Human Teaching Behaviors

Abstract

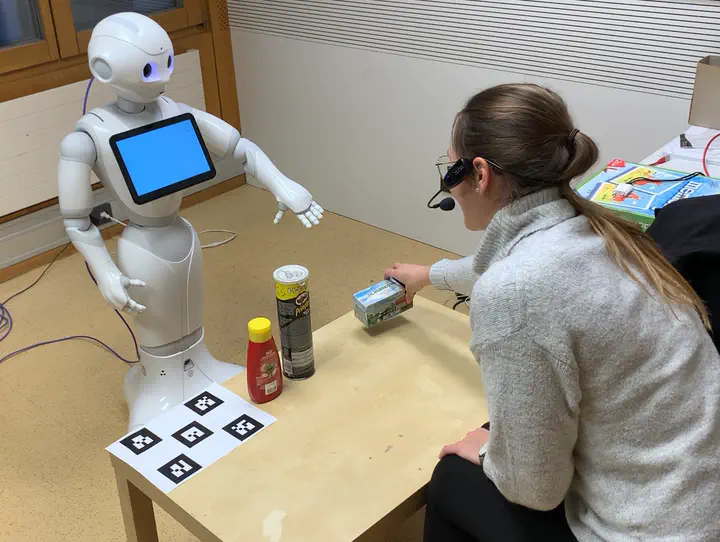

Robots need to understand words for references in social spaces (e.g., objects, locations, actions). Grounded language learning systems aim to learn these words from observing a human tutor. Teaching a robot is difficult for naive users due to the discrepancy between the users’ mental model and the actual state of the robot. We introduce a grounded word-learning system with the Pepper robot which learns object and action labels and investigate two extensions geared towards increasing the system’s transparency. The first extension utilizes deictic gestures (pointing and gaze) to communicate knowledge about object names, and to further request new labels. The second extension shows the current state of the lexicon on the robot’s tablet. We performed a user study (n=32) to investigate the effects of the transparency methods on learning performance and teaching behavior. In a quantitative analysis, we did not see a significant performance increase for the two extensions. However, users reported higher perception of control and perceived learning success, the better they knew the current state of the learning system. In a qualitative analysis, we investigated the participants’ teaching behaviors and identified factors that inhibited the learning process. Among other things, we found increased interactive behavior of users when the robot displayed deictic gestures. We saw that human tutors simplified their utterances over time to adapt to the perceived capabilities of the robot. The tablet was most helpful for users to understand what the robot had already learned. However, learning was impaired in all conditions, when the human input substantially deviated from the form required by the learning system.